On Cloud Labs: Experimental power vs compute power

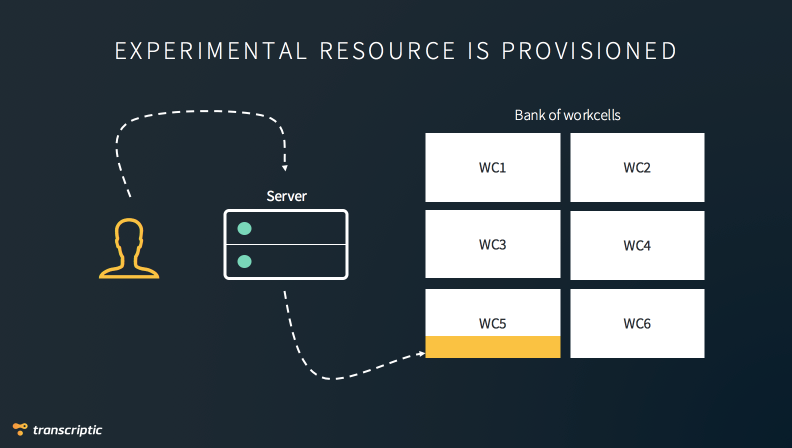

At a recent workshop I gave at SynBioBeta 2016 in London, I used the phrase “Then experimental resource is dynamically provisioned to execute your experiment.” I had never really thought of experimental resource, or experimental power, as a thing before Transcriptic, but it describes the concept well and fits the cloud lab / cloud computing analogy.

Experimental resource is basically an abstract combination of instrument capability and availability to carry out the set of instructions that make up a protocol. For instance, if I had 96 wells to PCR, I would only need to provision one thermocycler for the duration of the PCR experiment. If I had 2 × 96 wells, a system could accommodate this by provisioning thermocycler time in serial (one instrument, two runs) or in parallel (two instruments at once) to complete the protocol. At Transcriptic, multiple thermocyclers across multiple workcells can be provisioned to execute a protocol.
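To make the thermocycler example concrete, here is a minimal sketch of how a scheduler might turn a well count into provisioned instruments. It is purely illustrative and not how the Transcriptic platform works: the function name `provision_pcr`, the `Provision` record, the 96-well block size per instrument and the 90-minute run time are all my assumptions.

```python
import math
from dataclasses import dataclass

# Assumption: each thermocycler holds one 96-well plate per run.
WELLS_PER_BLOCK = 96


@dataclass
class Provision:
    plates: int           # 96-well plates needed to hold all the wells
    thermocyclers: int    # instruments provisioned to the run
    rounds: int           # back-to-back PCR runs on the busiest instrument
    total_minutes: float  # wall-clock time for the whole protocol


def provision_pcr(wells: int, available: int, run_minutes: float = 90.0) -> Provision:
    """Hypothetical provisioning rule: go parallel when instruments are free,
    fall back to serial runs when they are not."""
    if wells <= 0:
        raise ValueError("wells must be positive")
    if available <= 0:
        raise ValueError("at least one thermocycler must be available")

    plates = math.ceil(wells / WELLS_PER_BLOCK)
    thermocyclers = min(plates, available)      # never provision more than needed
    rounds = math.ceil(plates / thermocyclers)  # serial runs per instrument
    return Provision(plates, thermocyclers, rounds, rounds * run_minutes)


if __name__ == "__main__":
    print(provision_pcr(96, available=4))   # one plate, one instrument
    print(provision_pcr(192, available=4))  # two plates in parallel
    print(provision_pcr(192, available=1))  # two plates in serial
```

With these assumed numbers, the parallel case finishes in one run time while the serial case takes two, which is the trade-off between experimental power and wall-clock time in miniature.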

The analogy to AWS EC2

When using Amazon Web Services I can ‘spin up’ a virtual machine of varying compute power. For some basic programming in the cloud I could just create a low-spec micro instance; if I want to process a large dataset, I could ‘spin up’ an Amazon Elastic MapReduce cluster to process the job efficiently, and I could scale it at will to run faster or cost less. This is the provisioning of compute power on demand to execute a user task.

At Transcriptic the user doesn’t yet have the ability to specify the scale at which experimental resource is provisioned to them; however, I think that could be a very interesting future. Currently the Transcriptic platform determines the magnitude of experimental power required for the experiment and provisions that to the user’s run.

User control over execution environment

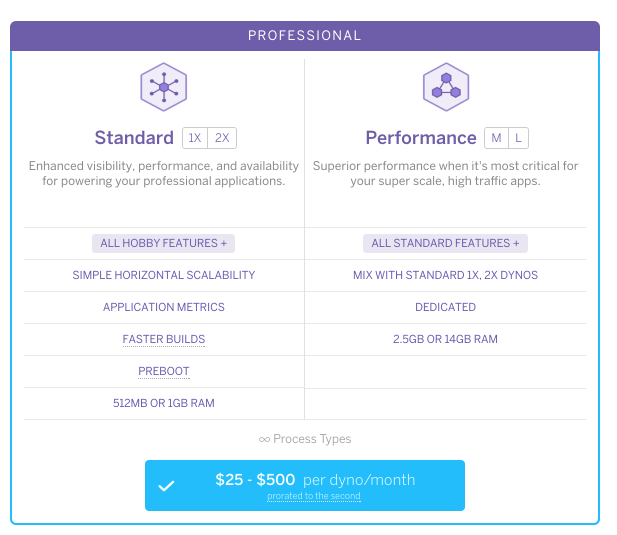

Execution environment is a really interesting subject for cloud labs. Could we provide low-cost but slow environments for users to run experiments, or high-performance environments for extremely fast, highly accurate execution of experiments at a premium?

Well, potentially the answer is yes. Fundamentally, though, biological materials are time sensitive, unlike a dataset on Amazon S3 that won’t degrade over time (on day-to-day timescales anyway!), so simply running slower is not always an option. There are potentially other ways to provide variable-cost resources as well. For example, error is an inescapable property of doing science, but the range of error is something that can be quantified and generally controlled for. With this in mind it might be possible to provide lower-cost instances of experimental power that have higher error ranges and, conversely, premium experimental power that has very low error on steps such as liquid handling.

Containment level is another execution environment variable that I think is relevant. As a cloud lab user I need to be able to specify whether I want my experiment running in a BSL-1 or BSL-2 environment, or, if I am doing some RNA-seq library prep, maybe I want to specify that my protocols be executed in RNase-free environments. Of course this can be determined by the cloud lab provider; however, I think that for the true adoption of a cloud computing-like cloud lab future, users should be able to specify these environments.
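One way to picture user-specified execution environments is as a small request object that a provider matches against what it can offer. The sketch below is hypothetical and not an existing Transcriptic or cloud lab API: `ExecutionEnvironment`, `Containment`, `satisfies`, the `rnase_free` flag (my name for the clean environment that RNA-seq library prep needs) and the liquid-handling error field are all names I made up for illustration. It also assumes a BSL-2 environment is acceptable for work requested at BSL-1.

```python
from dataclasses import dataclass
from enum import Enum


class Containment(Enum):
    BSL1 = 1
    BSL2 = 2


@dataclass(frozen=True)
class ExecutionEnvironment:
    containment: Containment
    rnase_free: bool = False        # clean environment for RNA work (assumed flag)
    max_pipetting_cv: float = 0.05  # tolerated liquid-handling error, as a
                                    # coefficient of variation (assumed metric)


def satisfies(offered: ExecutionEnvironment, requested: ExecutionEnvironment) -> bool:
    """True if the offered environment meets or exceeds every requested property."""
    return (
        # Assumption: higher containment can host lower-containment work.
        offered.containment.value >= requested.containment.value
        # An RNase-free request can only be met by an RNase-free environment.
        and (offered.rnase_free or not requested.rnase_free)
        # The offered error must be at least as tight as what was asked for.
        and offered.max_pipetting_cv <= requested.max_pipetting_cv
    )


if __name__ == "__main__":
    request = ExecutionEnvironment(Containment.BSL1, rnase_free=True)
    premium = ExecutionEnvironment(Containment.BSL2, rnase_free=True, max_pipetting_cv=0.02)
    budget = ExecutionEnvironment(Containment.BSL1, rnase_free=False, max_pipetting_cv=0.10)
    print(satisfies(premium, request))  # premium environment meets the request
    print(satisfies(budget, request))   # budget environment does not
```

A pricing tier would then just be a label attached to each offered environment, with looser error bounds costing less.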

Experimentation for free in the cloud

To extend the cloud computing analogy, free execution environments are provided by Heroku and AWS EC2. These are generally slow, low compute power, evanescent instances. What does this look like for cloud labs?

Currently there are some barriers to free cloud lab instances. Where compute power is directly tied to Moore’s law, experimental power is not tied to just one commodity and therefore can’t benefit from the same huge cost reductions. That said, the fields of automation and robotics continue to benefit from the steadily falling costs of electronic and mechanical components.

Materials often play a big part in why experiments can’t be free of charge. For a run on Transcriptic hardware it is not uncommon for the majority of the price to come from the reagents used in the run, such as the DNA polymerase or an enzyme substrate. Part of the cost of these reagents often goes to cover licensing fees for the underlying IP. As for sophisticated analytical hardware made by third-party manufacturers like Thermo or Bio-Rad, there is rarely any significant pricing pressure to decrease the cost of these instruments. Altogether these factors can add up to a basic threshold of cost that is difficult to lower.

The takeaway

I love working in this space because there is so much opportunity to define the future. In the case of cloud labs there are many new concepts that need to be explored and defined. Experimental power is a useful concept for Transcriptic to convey how our users’ experiments are executed; however, I hope it can become better defined and transformed into a commodity, where users can control how much experimental power they have access to. The best thing is that nothing is set in stone yet, so all of this is up for debate and refinement.

Thanks to Max Hodak for feedback on this post.